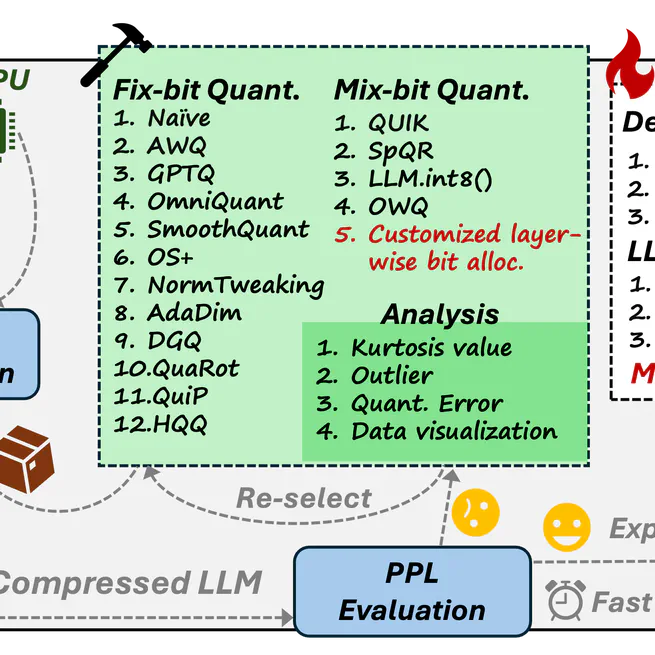

LLMC

An off-the-shelf tool for compressing LLMs, leveraging state-of-the-art compression algorithms to improve efficiency and reduce model size without compromising performance.

Nov 26, 2024

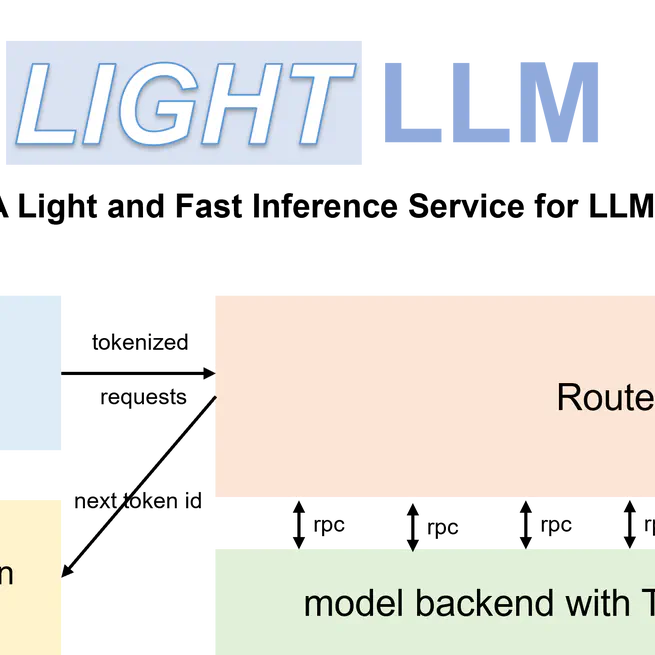

LightLLM

A Python-based LLM inference and serving framework, notable for its lightweight design, easy scalability, and high-speed performance.

Jan 27, 2024