LightLLM

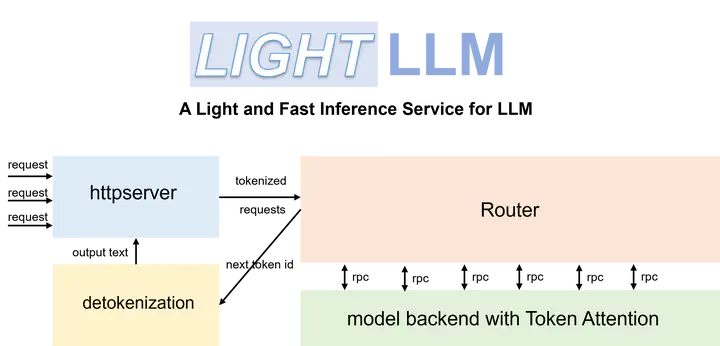

LightLLM is a Python-based LLM (Large Language Model) inference and serving framework, notable for its lightweight design, easy scalability, and high-speed performance. LightLLM harnesses the strengths of numerous well-regarded open-source implementations, including but not limited to FasterTransformer, TGI, vLLM, and FlashAttention.

Features

- Tri-process asynchronous collaboration: tokenization, model inference, and detokenization are performed asynchronously, leading to a considerable improvement in GPU utilization.

- Nopad (Unpad): offers support for nopad attention operations across multiple models to efficiently handle requests with large length disparities.

- Dynamic Batch: enables dynamic batch scheduling of requests.

- FlashAttention: incorporates FlashAttention to improve speed and reduce GPU memory footprint during inference.

- Tensor Parallelism: utilizes tensor parallelism over multiple GPUs for faster inference.

- Token Attention: implements a token-wise KV cache memory management mechanism, allowing for zero memory waste during inference.

- High-performance Router: collaborates with Token Attention to meticulously manage the GPU memory of each token, thereby optimizing system throughput.

- Int8KV Cache: increases token capacity to almost twice as much. Only Llama models are supported.
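
To make the token-wise KV cache idea behind Token Attention more concrete, here is a minimal sketch of a slot allocator that hands out cache memory one token at a time. It is an illustration only, not LightLLM's actual implementation: the class `TokenSlotAllocator`, its methods, and the notion of integer "slots" are all hypothetical names chosen for this example. The point it shows is that when memory is tracked per token, a request occupies exactly as many slots as it has tokens, and slots released by a finished request can be reused by any other request without needing to be contiguous.

```python
from typing import Dict, List


class TokenSlotAllocator:
    """Hypothetical token-granular KV cache slot manager (illustrative only)."""

    def __init__(self, capacity: int) -> None:
        # Each slot stands for the KV cache storage of exactly one token.
        self.capacity = capacity
        # Free slots kept as a stack; reversed so the lowest index pops first.
        self.free_slots: List[int] = list(range(capacity - 1, -1, -1))
        # Slots currently owned by each in-flight request.
        self.request_slots: Dict[str, List[int]] = {}

    def can_allocate(self, num_tokens: int) -> bool:
        # A scheduler could use this check to decide whether to admit a request.
        return num_tokens <= len(self.free_slots)

    def allocate(self, request_id: str, num_tokens: int) -> List[int]:
        # Reserve one slot per token; the slots need not be contiguous.
        if not self.can_allocate(num_tokens):
            raise MemoryError("not enough free token slots")
        slots = [self.free_slots.pop() for _ in range(num_tokens)]
        self.request_slots.setdefault(request_id, []).extend(slots)
        return slots

    def free(self, request_id: str) -> None:
        # Return every slot of a finished request to the free pool.
        self.free_slots.extend(self.request_slots.pop(request_id, []))


if __name__ == "__main__":
    allocator = TokenSlotAllocator(capacity=8)
    allocator.allocate("req-a", 3)   # prompt of 3 tokens
    allocator.allocate("req-b", 2)   # prompt of 2 tokens
    allocator.allocate("req-a", 1)   # one decode step adds a single token
    allocator.free("req-b")          # req-b finishes; its 2 slots are reusable
    print(allocator.allocate("req-c", 4))  # reuses req-b's slots plus fresh ones
```

In this sketch a scheduler could call `can_allocate` before admitting a request, which is one plausible way a router might cooperate with token-level memory accounting; how LightLLM's router actually does this is not described in this section.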