Ruihao Gong

About Me

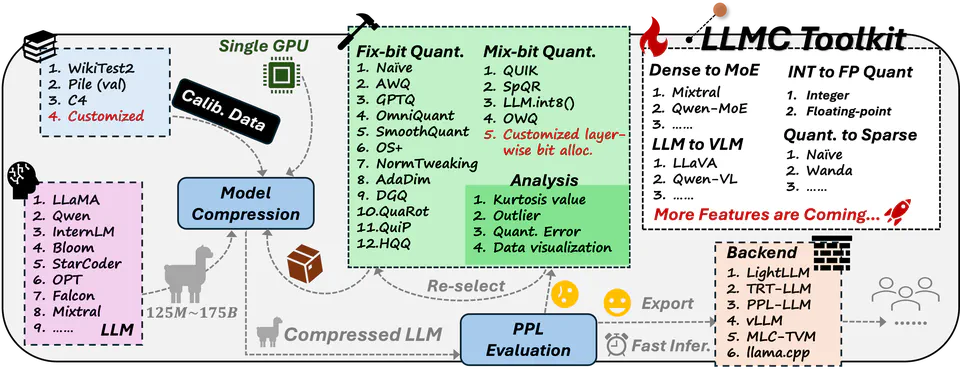

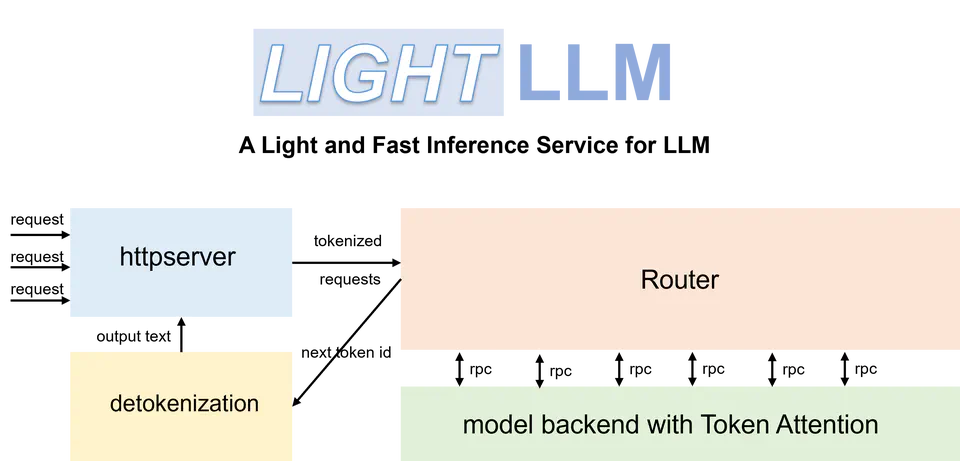

Ruihao Gong focuses on systems and algorithms for accelerating industrial model production, model deployment, and model efficiency. His research interests include model quantization, model sparsity, hardware-friendly neural networks for diverse hardware such as cloud servers and mobile/edge devices, systems for large-model training and inference, and applications such as smart cities, L2/L4 autonomous driving, and personal AI assistants.

Awards

- Winner of Low Power Computer Vision Challenge ∙ IEEE ∙ August 2023
- 2023 IEEE UAV Chase Challenge Award ∙ IEEE ∙ August 2023
- Winner of Low Power Computer Vision Challenge ∙ IEEE ∙ August 2021
- Tencent Rhino-Bird Elite Training Program ∙ Tencent ∙ July 2020
- SenseTime Future Star Talents ∙ SenseTime ∙ December 2019
- National Scholarship ∙ Ministry of Education ∙ October 2019
- CCF Outstanding Undergraduate Award ∙ China Computer Federation (CCF) ∙ October 2018